September 15, 2017 - “The rapid emergence and adoption of artificial intelligence (AI) techniques like machine and deep learning are a wakeup call: AI will transform the technology landscape and touch almost every industry over the next 10 years,” says Tractica, a market intelligence firm that focuses on human interaction with technology. “No domain of computing will escape the transformation, including high-performance computing.”

Cray’s approach to parallelism and performance includes extremely scalable compute, storage and analytics. Cray systems, software and toolkits help organizations accelerate their machine learning and deep learning projects.

Cray’s unique history in supercomputing and analytics has given us front-line experience in pushing the limits of CPU and GPU integration, network scale, tuning for analytics, and optimizing for both model and data parallelization. Some Cray initiatives and projects that underline our most recent work:

- At the end of 2016, Cray’s deep learning group and engineers at the Swiss National Supercomputing Centre (CSCS) successfully scaled out the Microsoft Cognitive Toolkit (an open-source, commercial-grade toolkit that trains deep learning algorithms to learn like the human brain) to over 1,024 Cray XC50 supercomputer nodes. http://www.cscs.ch/index.php?id=1662

- In June 2017 Cray announced the release of the Cray® Urika®-XC analytics software suite, bringing graph analytics, deep learning, and robust big data analytics tools to the Company’s flagship line of Cray XC™ supercomputers. The Cray Urika-XC analytics software suite comprises the Cray Graph Engine, the Apache® Spark™ analytics environment, the BigDL distributed deep learning framework for Spark, the distributed Dask parallel computing libraries for analytics, and widely used languages for analytics including Python, Scala, Java, and R. Cray will provide full support for the software suite. http://investors.cray.com/phoenix.zhtml?c=98390&p=irol-newsArticle&ID=2281841

- In August 2017 a collaborative effort between Intel, NERSC and Stanford produced the most scalable deep-learning implementation in the world on a Cray XC40 system with a configuration of 9,600 self-hosted 1.4GHz Intel Xeon Phi Processor 7250 based nodes. The system achieved a peak rate between 11.73 and 15.07 petaflops (single-precision) and an average sustained performance of 11.41 to 13.47 petaflops when training on physics and climate based data sets using Lawrence Berkeley National Laboratory’s (Berkeley Lab) NERSC (National Energy Research Scientific Computing Center) Cori Phase-II supercomputer (see https://www.hpcwire.com/2017/08/28/nersc-scales-deep-learning15-pflops/ ).
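As a rough sanity check on the figures quoted above, the aggregate rates can be divided by the 9,600 nodes to give an implied per-node rate. The sketch below assumes the work is spread evenly across all nodes, which the source does not state; the function name is illustrative only.

```python
# Back-of-the-envelope check: per-node single-precision rate implied by the
# aggregate figures quoted in the text. Assumes work is spread evenly over
# all nodes (an assumption; the source reports only aggregate rates).

NODES = 9_600  # self-hosted Xeon Phi 7250 nodes, from the text


def per_node_teraflops(aggregate_petaflops: float, nodes: int = NODES) -> float:
    """Convert an aggregate rate in petaflops to teraflops per node."""
    return aggregate_petaflops * 1_000 / nodes


# Aggregate single-precision rates reported in the text, in petaflops.
reported = {
    "peak (low)": 11.73,
    "peak (high)": 15.07,
    "sustained (low)": 11.41,
    "sustained (high)": 13.47,
}

for label, pflops in reported.items():
    print(f"{label:>16}: {per_node_teraflops(pflops):.2f} TF/node")
```

Under that even-spread assumption, the reported peak range corresponds to roughly 1.2 to 1.6 teraflops per node.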

More information on Cray’s current portfolio for deep learning is available at the links below:

- Cray® Urika®-GX agile analytics platform for CPU-based machine learning using Apache® Spark™ and Cray Graph Engine

- Cray® CS-Storm™ accelerated cluster supercomputer for organizations leveraging NVIDIA GPU accelerators in production-use machine learning

- Cray® XC50™ supercomputer for GPU deep learning neural networks

- Cray XC series supercomputers with Urika-XC analytics for organizations using Apache Spark, Python-based Open Data Science, and the BigDL distributed deep learning library

Are you interested in attending one of our Cray Deep Learning Days with the NVIDIA Deep Learning Institute?

Our November workshops will be in Basel and Bristol, and we’ll offer Cray Deep Learning Days in France starting early in 2018. If you would like further information, please send an email to: pisani@cray.com

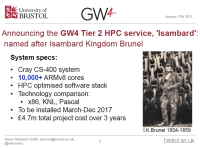

Cray GW4 Isambard ARM Cluster overview - Image courtesy of Simon McIntosh-Smith

Cray is going to build what looks to be the world’s first ARM-based supercomputer.

The system, known as “Isambard,” will be the basis of a new UK-based HPC service that will offer the machine as a platform to support scientific research and to evaluate ARM technologies for high performance computing. Installation of Isambard is scheduled to begin in March, and the system is expected to be up and running before the end of the year. Key Isambard specifications include:

- 10,000+ ARMv8 64-bit cores

- Cray CS-400 format

- Cray HPC software stack

- EDR IB interconnect

‘This is an exciting time in high-performance computing,’ said Prof Simon McIntosh-Smith, leader of the project and Professor of High Performance Computing at the University of Bristol. ‘Scientists have a growing choice of potential computer architectures to choose from, including new 64-bit ARM CPUs, graphics processors, and many-core CPUs from Intel. Choosing the best architecture for an application can be a difficult task, so the new Isambard GW4 Tier 2 HPC service aims to provide access to a wide range of the most promising emerging architectures, all using the same software stack. Isambard is a unique system that will enable direct ‘apples-to-apples’ comparisons across architectures, thus enabling UK scientists to better understand which architecture best suits their application.’

Cray Inc. today announced the results of a deep learning collaboration between Cray, Microsoft, and the Swiss National Supercomputing Centre (CSCS) that expands the horizons of running deep learning algorithms at scale using the power of Cray supercomputers.

Running larger deep learning models is a path to new scientific possibilities, but conventional systems and architectures limit the problems that can be addressed, as models take too long to train. Cray worked with Microsoft and CSCS, a world-class scientific computing center, to leverage their decades of high performance computing expertise to profoundly scale the Microsoft Cognitive Toolkit (formerly CNTK) on a Cray® XC50™ supercomputer at CSCS nicknamed “Piz Daint”.

“Applying a supercomputing approach to optimize deep learning workloads represents a powerful breakthrough for training and evaluating deep learning algorithms at scale,” said Dr. Xuedong Huang, distinguished engineer, Microsoft AI and Research. “Our collaboration with Cray and CSCS has demonstrated how the Microsoft Cognitive Toolkit can be used to push the boundaries of deep learning.”

http://investors.cray.com/phoenix.zhtml?c=98390&p=irol-newsArticle&ID=2228098

http://www.cray.com/machine-learning

Cray XC50 Supercomputer v2

November 17, 2016 -

Cray launches next-generation supercomputer

At the 2016 Supercomputing Conference in Salt Lake City, Utah, global supercomputer leader Cray Inc. (Nasdaq:CRAY) today announced the launch of the Cray® XC50™ supercomputer – the company’s fastest supercomputer ever with a peak performance of one petaflop in a single cabinet.

The Cray XC50 supercomputer is engineered to address the broad application performance challenges of today’s most demanding high performance computing (HPC) users. Special features of the Cray XC50 supercomputer include:

- the industry-leading Aries network interconnect, which is designed specifically to meet the performance requirements seen in today’s emerging class of data center GPU accelerated applications, where high node-to-node communication performance is critical;

- a Dragonfly network topology tightly integrated with Aries that reduces communication latency for scale-out applications that rely heavily on the Message Passing Interface;

- optional SSD-enabled DataWarp™ I/O accelerator technology, enabling software-defined provisioning of application data for improved performance;

- innovative cooling systems to lower customers’ total cost of ownership;

- the next-generation of the high performance and tightly integrated Cray Linux Environment that supports a wide range of applications;

- image-based systems management for easy upgrades, less downtime, and field-tested large-scale system deployment;

- enhancements to Cray’s HPC optimized programming environment for improved performance and programmability of GPU environments;

- support for the NVIDIA® Tesla® P100 GPU accelerator as well as support for next-generation Intel® Xeon® and Intel Xeon Phi™ processors.

Located in Lugano, Switzerland, CSCS is currently upgrading its Cray XC30™ supercomputer nicknamed “Piz Daint” to a Cray XC50 system, and will combine it with the Centre’s Cray XC40™ supercomputer nicknamed “Piz Dora”. Once completed, the combined system will adopt the Piz Daint name and will be one of the fastest supercomputers in the world.

Find out more:

http://www.cray.com/products/computing/xc-series

http://investors.cray.com/phoenix.zhtml?c=98390&p=irol-newsArticle&ID=2221937

PGS Titan seismic vessel - image courtesy of PGS

Cray Inc. today announced that PGS has significantly enhanced its supercomputing capabilities with the purchase of a new 12-cabinet Cray® XC™ supercomputer. PGS, a worldwide oil-and-gas company and a leader in marine seismology, also added 2.8 petabytes of capacity to its Cray® Sonexion® storage system.

Named “Galois” after the French mathematician Évariste Galois, the new Cray XC supercomputer at PGS will analyze seismic data to produce more accurate images and multi-dimensional models of the Earth’s subsurface beneath the ocean floor. The new system will allow PGS to run larger jobs with more complex data and algorithms that will produce higher-quality images in less time. With the expanded supercomputing capabilities, PGS can give its customers the ability to better locate oil and gas deposits.

More Information:

http://investors.cray.com/phoenix.zhtml?c=98390&p=irol-newsArticle&ID=2212041

Machine Learning at Scale for Full Waveform Inversion at PGS

‘Shaheen II’ System Enables World’s First Trillion-Cell Simulation

Cray Inc. announces it has joined iEnergy community brokered by Halliburton Landmark

Urika GX System

Analyzing an organization's vulnerability footprint from an adversary's perspective can enable proactive detection and efficient mitigation of cyber threats

Deloitte Advisory Cyber Risk Services and Cray Inc. (Nasdaq: CRAY), the global supercomputing leader, introduced today the first commercially available high-speed, supercomputing threat analytics service, Cyber Reconnaissance and Analytics. The subscription-based offering is designed to help organizations effectively discover, understand and take action to defend against cyber adversaries.

The threat intelligence reports generated through this service are already enabling clients, including the Department of Defense, to focus remediation teams by providing detailed, actionable information on potential threat vectors and identifying specific flows, network locations, times and events. "We are leveraging Deloitte Advisory's Cyber Reconnaissance service to enable large scale cyber data analytics to more proactively defend our networks and support critical national missions," said Clayton Jones, USPACOM J6 Senior Advisor and Strategic Cyber Integrator.

"What do you look like to your adversary?" commented Deborah Golden, federal cyber risk services leader for Deloitte Advisory and principal at Deloitte & Touche LLP. "Instead of reviewing every component of an agency's internal enterprise, we are trying to show what the adversary sees in order to give an organization a true 'risk profile.' This includes the ability to effectively prioritize internal budgets and resources, and facilitate a realistic approach toward assessing network defense effectiveness."

The Cyber Reconnaissance and Analytics service is powered by the Cray® Urika®-GX system – Cray's new agile analytics platform that fuses the Company's supercomputing technologies with an open, enterprise-ready software framework for big data analytics. The Cray Urika-GX system gives customers unprecedented versatility for running multiple analytics workloads concurrently on a single platform that exploits the speed of a Cray supercomputer.

Examples of Deloitte Advisory/Cray Cyber Reconnaissance Results:

- DISCOVERED 1,000+ exploits that could be leveraged to gain entry into client organizations

- IDENTIFIED 250+ companies in various organizational networks beaconing to the same Internet Relay Chat (IRC) servers, indicating malicious activity

- UNCOVERED privileged targets, including critical personnel and infrastructure

- LOCATED servers hosting malicious activity and suspicious traffic to web providers hosting illegal activities

- FOUND potential entry points for spear-phishing using social content from an online resume

More Information:

Deloitte Advisory Cyber Risk Services and Cray offer advanced Cyber Reconnaissance and Analytics Services

Cyber Reconnaissance and Analytics - Inverting the detection lens to preempt cyberthreats

December 8, 2015 -

You may have seen recent news items regarding the Human Brain Project (HBP), a ten-year European neuroscience research initiative. Interactive computer simulation of brain models is central to its success. Cray was recently awarded a contract for the third and final phase of an R&D program (known in the European Union as a Pre-Commercial Procurement or PCP) to deliver a pilot system on which interactive simulation and analysis techniques will be developed and tested. The Cray work is being undertaken by the newly launched Cray EMEA Research Lab. This article discusses the ideas being developed and tested, ideas that we expect to be useful to many Cray users.

Step one is to manage the computer system in the same way other large pieces of experimental equipment are managed. Users book time slots on the experiment rather than submitting work to a queue. This can be achieved using a scheduler with an advance reservation system. An alternative that we have been working on for some time is to suspend the running jobs, either to memory or to fast swap space on a Cray® DataWarp™ filesystem. A number of Cray sites already use these techniques to run repetitive production cycles at the same time each day. By pushing these ideas a little further we can open up the possibility of using Cray supercomputer systems interactively.

Memory capacity is a limiting factor in brain simulations. Researchers currently envision data sets that will require tens of petabytes of main memory, which already exceeds the capacities of the largest supercomputers on the planet. This requirement will then increase to hundreds of petabytes for a full brain-scale simulation! In short, it will be impossible to store all this data in memory at one time. One solution could be that users will interactively select the most interesting data to analyse and visualize, and a small subset of results to store.

The simulation codes use so-called “recording devices” to store a selection of results and pass them on for further analysis. The simulation is started with default recording settings. If the initial analysis reveals interesting behaviour, then the experiment can be extended or repeated with detailed recording enabled for a subset of data objects.

Step two is to provide developers with the ability to couple simulation, analysis and visualization applications into a single workflow. We are all accustomed to HPC flows in which simulation jobs write their results, either periodically or at the end of the job, and post-processing jobs analyse the data generated. In a coupled workflow the simulation and analytics applications run simultaneously. An analysis job might run on dedicated resources or it might run on the same nodes as the simulation, feeding its results to a visualisation system. Both techniques require a fast method for transferring data between applications and efficient methods of synchronization. The pilot system will have a pool of GPU nodes dedicated to this task.

The third and final element of the HBP work is the ability to steer simulations as they run. This is more a way of thinking about how to perform simulation than a specific technology. The experiment is set up by constructing the brain network wiring in memory, a time-consuming process that results in more than a petabyte of data even with today’s relatively small models. This network is retained in memory while the scientist runs a sequence of virtual experiments or “what if” studies in quick succession, with the results of one run steering the next. Steering data can be fed back to the simulation through socket connections or through the filesystem.

In addition to novel software, the HBP pilot system will preview a number of interesting memory and processor technologies. Check back at the Cray blog for updates on this and other advances at Cray.
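The recording and steering pattern described in this article can be sketched in a few lines. This is a toy illustration, not HBP or Cray code: the `build_network`, `run_experiment` and `steer` names, the dictionary standing in for a "recording device", and all the numbers are hypothetical. Steering decisions are passed in-process here, whereas the article mentions socket connections or the filesystem. The sketch shows only the shape of the workflow: build the expensive network once, keep it in memory, and let each run decide what the next run records in detail.

```python
import random


def build_network(n_neurons, seed=0):
    """Stand-in for the expensive wiring step: done once, then kept in memory."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(n_neurons)]  # one weight per toy neuron


def run_experiment(network, stimulus, record_ids=None):
    """Run one virtual experiment on the in-memory network.

    The returned `detail` dict plays the role of a recording device: it keeps
    full results only for the requested subset of objects.
    """
    activity = [w * stimulus for w in network]
    summary = {"mean": sum(activity) / len(activity)}  # default recording
    detail = {i: activity[i] for i in (record_ids or [])}
    # Returning `activity` is a toy shortcut; a real system could not hold or
    # ship the full state and would have to make the selection in place.
    return summary, detail, activity


def steer(activity, top_k=3):
    """Choose the most active neurons as the subset to record next time."""
    ranked = sorted(range(len(activity)), key=activity.__getitem__, reverse=True)
    return ranked[:top_k]


network = build_network(1000)      # constructed once, reused by every run
record_ids = None                  # first run uses default recording only
history = []
for stimulus in (0.5, 1.0, 2.0):   # a sequence of "what if" studies
    summary, detail, activity = run_experiment(network, stimulus, record_ids)
    history.append((summary, detail))
    record_ids = steer(activity)   # results of this run steer the next
```

The first run records only a summary; later runs add detailed records for the neurons that the previous run flagged as most active.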

December 8, 2015 - In October 2015 Cray announced the creation of the Cray Europe, Middle East and Africa Research Lab (CERL) based out of Cray's EMEA headquarters in Bristol, England. Our investment in Europe is not new (the Cray®-1 and every machine since found a European home), but an explicit focus on research is a big and bold move for our company.

The lab positions Cray to work with new and existing customers on various projects such as special research and development initiatives, the co-design of customer-specific technology solutions and collaborative joint research projects with a wide array of organizations. The research lab acts as Cray's main interface to European development programs, such as Horizon 2020 and the FET Flagship Initiatives.

The Cray EMEA Research Lab is currently engaging with a number of supercomputing centers on important research projects. Cray is working with the Jülich Supercomputing Centre in Germany to deliver a pilot supercomputing system for the Human Brain Project. Cray is also collaborating on various initiatives with other organizations such as the Edinburgh Parallel Computing Centre at the University of Edinburgh in the United Kingdom, the Alan Turing Institute in London and the Swiss National Supercomputing Centre in Lugano, Switzerland. Cray’s new lab will be 100 percent focused on such collaborative R&D and co-design efforts, providing a single point of contact and a pooling of expertise.

Perhaps the strongest motivation for CERL lies in the difficulty of designing future hardware and software solutions. Current hardware trends indicate severe disruption in both future hardware and software, though the exact form of those changes is unknown. For example, the standard HPC programming model is widely considered to be insufficient for exascale system programming, though there is no consensus on its replacement.

CERL researchers are investigating new technologies for high-level programming of supercomputers such as DSLs, Python and Chapel. We are also investigating new abstractions for data layout optimization and auto-parallelism, efficient usage of new memory technology (such as high-bandwidth and non-volatile memories), software infrastructures for interactive computing and programming models for High-Performance Data Analytics. These projects are worlds away from being mature products. The intent is that these collaborative projects help guide and shape Cray’s actual R&D programs so that future products tackle the deep technical challenges our customers are anticipating.

The European funding scenario has never been more vital, and with CERL we believe that Cray is well positioned to enter joint funding proposals in high-profile programs such as Horizon 2020. That will certainly open new collaborations and partnerships for Cray.

December 8, 2015 -

Global supercomputer leader Cray Inc. has been awarded a contract to provide a Cray® XC40™ supercomputer to the Interdisciplinary Centre for Mathematical and Computational Modelling (ICM) at the University of Warsaw in Poland. The six-cabinet Cray XC40 system will be located in ICM's OCEAN research data center, and will enable interdisciplinary teams of scientists to address the most computationally complex challenges in areas such as life sciences, physics, cosmology, chemistry, environmental science, engineering, and the humanities.

ICM is Poland's leading research center for computational and data driven sciences, and is one of the premier centers for large-scale high performance computing (HPC) simulations and big data analytics in Central and Eastern Europe.

"The new Cray XC40 substantially increases our HPC capabilities," said Professor Marek Niezgódka, managing director of ICM. "Contemporary science is undergoing a paradigm shift towards data-intensive scientific discovery. The unprecedented availability of large quantities of data, new algorithms and methods of analysis has created new opportunities for us, as well as new challenges. By selecting the Cray XC40 as our next generation compute platform, we're investing in our ability to conduct cutting edge research for years to come."

December 8, 2015 -

Global supercomputer leader Cray Inc. has been awarded a contract to provide the Alfred Wegener Institute, Helmholtz Centre for Polar and Marine Research (AWI) with a Cray® CS400™ cluster supercomputer. Headquartered in Bremerhaven, Germany, AWI is one of the country's premier research institutes within the Helmholtz Association, and is an internationally respected center of expertise for polar and marine research.

The contract with AWI is the Company's first for a Cray® CS™ cluster supercomputer featuring the new Intel® Omni-Path Architecture. The system will also include next-generation Intel® Xeon® processors, which are the follow-on to the Intel® Xeon® processor E5-2600 v3 product family, formerly code-named "Haswell."

"The new Cray HPC System will become the most innovative part of the AWI computing infrastructure and will further strengthen the position of our science and research activities," said Prof. Dr. Wolfgang Hiller, head of the AWI computing centre. "It will enable researchers at our institute to model the earth system and its components more efficiently with higher resolution and accuracy. It is also a new landmark in the long cooperation of the AWI computing centre with Cray, reaching back to the days when a Cray T3E system was one of our major work horses. That system led to major scientific results in modelling and understanding the Antarctic circumpolar current and the exchange of water masses between the world oceans in the Antarctic. Hence, we are looking forward to new exciting scientific research results which will be produced with the new system."

December 8, 2015 -

AUSTIN, Tex., Nov. 17 - GCS member centre High Performance Computing Center of the University of Stuttgart (HLRS) announced that it has received top honours in the 2015 HPCwire Readers’ and Editors’ Choice Awards, which were presented at the 2015 International Conference for High Performance Computing, Networking, Storage and Analysis (SC15) in Austin, Texas. HLRS has been recognized as HPCwire Readers’ Choice in the category “Best Use of HPC in Automotive” for a project executed by HLRS in cooperation with data storage supplier DataDirect Networks (DDN) and the Automotive Simulation Center Stuttgart (ASCS).

December 8, 2015 -

At the 2015 Supercomputing Conference in Austin, Texas, global supercomputer leader Cray Inc. announced the Company won six awards from the readers and editors of HPCwire, as part of the publication’s 2015 Readers’ and Editors’ Choice Awards. This marks the 12th consecutive year Cray has been selected for multiple HPCwire awards.

December 8, 2015 - Global supercomputer leader Cray Inc. announced the Company plans to join the OpenHPC Project led by The Linux Foundation. Cray's participation in OpenHPC will focus on making technology contributions that will help to standardize software stack components, leverage open-source technologies, and simplify the maintenance and operation of the software stack for end-users.

The OpenHPC Project is designed to create a unified community of key stakeholders across the HPC industry focused on developing a new open-source framework for high performance computing (HPC) environments. The group's goal is to create a stable environment for testing and validation, as well as provide a robust and diverse open-source software stack that minimizes user overhead and associated costs.

"Cray is committed to providing our customers with the highest performing supercomputing systems, while simultaneously embracing the collaborative creation of industry standards that streamline our customers' workflow," said Steve Scott, Cray's senior vice president and chief technology officer. "We believe OpenHPC will deliver efficiency benefits to supercomputing center administrators and programmers, as well as the researchers and scientists that use our systems every day. The open-source community continues to play an important role for Cray, and as part of this effort we plan to open-source components of our industry-leading software environment to OpenHPC."

September 8, 2015 -

In June Cray announced the establishment of its European, Middle East and Africa (EMEA) headquarters at the Company's new office in Bristol, United Kingdom. Cray's new EMEA headquarters will serve as a regional base for its EMEA sales, service, training and operations, and as an important development site for worldwide R&D initiatives. The new headquarters will also provide the company with a centralized location for business engagements with new and existing customers.

September 8, 2015 -

In November 2014 Cray was awarded a contract to provide King Abdullah University of Science and Technology (KAUST) in Saudi Arabia with multiple Cray systems that span the Company's line of compute, storage and analytics products. The Cray XC40 system at KAUST, named "Shaheen II," is 25 times more powerful than the university's previous system, and it received the regional award at the TOP500 announcement at ISC’15 as the fastest system in the Middle East. The only new entry in the Top 10 of the latest list, at No. 7, Shaheen II achieved 5.536 petaflop/s on the Linpack benchmark, making it the highest-ranked Middle East system in the 22-year history of the list and the first to crack the Top 10.

Current survey and seismic simulation techniques involve incredibly large datasets and complex algorithms that face limits when run on commodity clusters. The oil and gas industry must find ways to use more data more effectively and make better decisions from their analyses. This has resulted in a huge influx of information entering into simulations, modelling and other supercomputing tasks.

At Cray, we noticed the importance of data-intensive analysis processes years ago and we’ve been developing solutions that are not built around traditional operating models and instead use innovative techniques to maximize operational efficiency. Specifically, our HPC systems go beyond adding raw power to operations and instead focus on moving data between supercomputing nodes efficiently. Traditional computational and I/O techniques can be replaced by methods focused on improved interconnect and storage capabilities, alongside traditional computing functionality, to help oil and gas companies stay ahead of the competition. We’ve also designed systems that incorporate GPUs and coprocessors, alternatives to traditional multicore CPUs, which can be leveraged to run today’s most demanding seismic processing workflows.

Recently Cray was awarded a significant contract to provide Petroleum Geo-Services (PGS), a global oil-and-gas company, with a Cray® XC40™ supercomputer and a Cray® Sonexion® 2000 storage system. The five-petaflop Cray XC40 supercomputer and Sonexion storage system provide PGS with the advanced computational capabilities necessary to run highly complex seismic processing and imaging applications. These applications include imaging algorithms for the PGS Triton survey, which is the most advanced seismic imaging survey ever conducted in the deep waters of the Gulf of Mexico.

Guillaume Cambois, executive vice president imaging & engineering with PGS, said: “With access to the greater compute efficiency and reliability of the Cray system, we can extract the full potential of our complex GeoStreamer imaging technologies, such as SWIM and CWI.”

Cray has just been awarded a $6 million contract to provide the Danish Meteorological Institute (DMI) with a Cray® XC™ supercomputer and a Cray® Sonexion® 2000 storage system. DMI has chosen to install the new Cray supercomputer and Sonexion storage system at the Icelandic Meteorological Office (IMO) datacenter in Reykjavik, Iceland for year-round power and cooling efficiency. In its final configuration, the new Cray XC supercomputer will be ten times more powerful than DMI's previous system, and will provide the Institute with the supercomputing resources needed to produce high quality numerical weather predictions within specified time intervals and with a high level of reliability.

DMI is only the latest in a growing list of the world's leading meteorological centers that have chosen to run their complex, data-intensive climate and weather models on Cray supercomputers. Nicole Hemsoth from The Platform recently covered the reasons behind Cray’s strong market share in weather and climate: “Weather forecasting centers need large, finely-tuned systems that are optimized around the limited, but complex codes these centers use for their production forecasts. […] they need architecture, system, software, and application-centric expertise—a set of demands that few supercomputing vendors have been able to fill to capture massive market share in the way Cray has managed to do over the last ten years”

One example of how applications see better scaling on Cray is the optimization work on the Met Office Unified Model (UM) on the Cray supercomputer “ARCHER”, which yielded up to 16% speedup. The researchers concluded that the investment in analysis and optimization resulted in performance gains that correspond to the saving of tens of millions of core hours on current climate projects.

At Cray we understand the demands of weather and climate, which span compute, analysis and storage. Cray’s Tiered Adaptive Storage (TAS) is a flexible archival solution for Numerical Weather Prediction (NWP), which can help address the challenges NWP organizations are facing as high resolution forecasts and larger ensembles drive super-linear growth in data archives. If you are concerned about the growth of your data archives for weather and climate and want to talk to Cray experts, contact Veronique Selly.

Improving Gas Turbine Performance with HiPSTAR

HiPSTAR (High Performance Solver for Turbulence and Aeroacoustics Research) is a highly accurate structured multiblock compressible fluid dynamics code in curvilinear/cylindrical coordinates, written in Fortran. The University of Southampton and GE performed scaling tests of HiPSTAR on the Cray supercomputer ARCHER; on a single-block test problem with a total of 1.3 x 10^9 collocation points, the code has already shown good scaling up to 36,864 cores.

ANSYS® Fluent® Helps Reduce Vehicle Wind Noise

Solving this demanding problem in a meaningful time frame requires a large number of compute cores. ANSYS Fluent is designed to take advantage of multiple cores using its MPI-based parallel implementation. Efficiently executing in parallel across a large number of cores depends on moving large amounts of data between the cores. The Cray XC40 system is designed to maximize the performance of interprocessor communication, allowing the cores to spend their time computing. The Aries interconnect, with its low latency, high bandwidth and adaptive routing, allows effective scaling for demanding simulations regardless of other jobs running on the system.

Trends in Crash Simulations

| December 1st, 2014 -

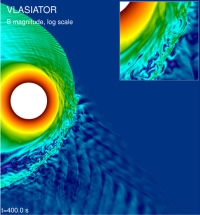

Using a Cray® XC30™ supercomputer featuring Intel Xeon E5 “Haswell” processors and hosted by the Finnish IT Center for Science Ltd. (CSC), researchers were able to run Vlasiator on an unprecedented 40,000 cores.

Film production company Studio 100 wanted to create a full-length feature film version of the famous Maya the Bee character in 3-D. For M.A.R.K.13, the animation studio doing the technical work, the task required calculating each of the CGI-stereoscopic film’s 150,000 images twice — once for the perspective of the left and once for the right eye. Additionally, the film’s detail-rich setting featuring lots of grass, dew drops, sunlight and transparent insect wings increased the computational complexity. Completing the task using the standard PC computing resources available was impossible within the company’s timeframe and budget.

The system is normally used by researchers at the University of Stuttgart in Germany and throughout Europe, as well as by industrial companies for research and development efforts. |

December 1st, 2014 - At the end of August Cray announced the new CS-Storm cluster, a dense, accelerated cluster supercomputer that offers 250 teraflops in one rack. In the latest TOP500 list, CS-Storm features as a new entry at number 10 with a 3.57 petaflop/s system installed at a U.S. government site. CS-Storm also proved how energy efficient it is by featuring at number 4 on the Green500 list, where 3 of the top 10 greenest systems are Cray systems, both clusters and supercomputers. Since then Cray has announced new products for computing, storing and analyzing: |

| December 1st, 2014 -

The Cray® XC40™ supercomputer is a massively parallel processing (MPP) architecture that leverages the combined advantages of next-generation Aries interconnect and Dragonfly network topology, Intel® Xeon® processors, integrated storage solutions and major enhancements to the Cray OS and programming environment. As part of the XC series, the XC40 is upgradable to 100 PF. An air-cooled option, the XC40-AC system, provides slightly smaller and less dense supercomputing cabinets with no requirement for liquid coolants or extra blower cabinets. The XC40 system is targeted at scientists, researchers, engineers, analysts and students across the technology, science, industry and academic fields.

The Cray® CS400-AC™ system is our air-cooled cluster supercomputer. Designed to offer the widest possible choice of configurations, it is a modular, highly scalable platform based on the latest x86 processing, coprocessing and accelerator technologies from Intel and NVIDIA. Industry standards-based server nodes and components have been optimized for HPC workloads and paired with a comprehensive HPC software stack, creating a unified system that excels at capacity- and data-intensive workloads. At the system level, the CS400-AC cluster is built using blades or rackmount servers. The Cray® GreenBlade™ platform consists of server blades aggregated into chassis. The platform is designed to provide mix-and-match building blocks for easy, flexible configuration at the node, chassis and whole-system level.

The Cray® CS400™ cluster supercomputer series is industry standards-based, highly customizable and designed to handle the broadest range of medium- to large-scale simulation and data analysis workloads. The CS400 cluster system is capable of scaling to over 11,000 compute nodes and 11 petaflops. Designed for significant energy savings, the CS400-LC™ system features liquid-cooling technology that uses heat exchangers instead of chillers to cool system components. Along with lowering operational costs, it also offers the latest x86 processor technologies from Intel. Industry-standard server nodes and components have been optimized for HPC and paired with a comprehensive HPC software stack. |

| December 1st, 2014 -

Cray’s Urika-XA™ extreme analytics platform is engineered for superior performance and cost efficiency on mission-critical analytics use cases. Pre-integrated with the industry-leading Hadoop® and Spark™ frameworks, yet versatile enough to serve analytics workloads of the future, the Urika-XA platform provides a turnkey analytics environment that enables organizations to extract valuable business insights. Optimized for compute-heavy, memory-centric analytics, the Urika-XA platform incorporates innovative use of memory-storage hierarchies, including SSDs for Hadoop and Spark acceleration, and fast interconnect, thus delivering excellent performance on emerging latency-sensitive applications.

|

| December 1st, 2014 -

The Cray® Sonexion® 2000 scale-out Lustre® storage system helps you simplify, scale and protect your data for HPC and supercomputing, rapidly delivering precision performance at scale with the fewest number of components. The system stores over 2 petabytes in a single rack and scales from 7 GB/s to 45 GB/s in a single rack, and to over 1.7 TB/s in a single Lustre file system. The system now includes a new type of software-based data protection system called GridRAID, which makes rebuilds up to 3.5 times faster than conventional hardware RAID.
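To put the per-rack and per-file-system figures above side by side, here is a rough back-of-the-envelope sketch. It assumes throughput adds up linearly across racks and that 1 TB/s equals 1,000 GB/s. Both are simplifying assumptions for illustration, not statements about how a real Sonexion deployment is sized. The function name is hypothetical.

```python
import math

def racks_for_bandwidth(target_gb_s: float, per_rack_gb_s: float) -> int:
    """Smallest whole number of racks whose combined throughput
    reaches the target, assuming perfectly linear scaling."""
    return math.ceil(target_gb_s / per_rack_gb_s)

# Figures quoted in the text: 45 GB/s per rack, 1.7 TB/s per file system.
racks = racks_for_bandwidth(target_gb_s=1700.0, per_rack_gb_s=45.0)

# At "over 2 petabytes" per rack, this is a lower bound on raw capacity.
min_capacity_pb = racks * 2

print(f"racks needed: {racks}, capacity: over {min_capacity_pb} PB")
```

Under these assumptions, a file system at the quoted peak rate would span a few dozen fully configured racks.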

|

| December 1st, 2014 -

At the 2014 Supercomputing Conference in New Orleans, Louisiana, global supercomputer leader Cray Inc. (NASDAQ: CRAY) announced the Company won six awards from the readers and editors of HPCwire, as part of the publication's 2014 Readers' and Editors' Choice Awards. This marks the 11th consecutive year Cray has been selected for multiple HPCwire awards. |

| December 1st, 2014 -

Green500: Piz Daint holds the number 9 position in the Green500 list |

| December 1st, 2014 -

Global supercomputer leader Cray Inc. (NASDAQ: CRAY) today announced the Company has been awarded a contract to provide the Met Office in the United Kingdom with multiple Cray® XC™ supercomputers and Cray® Sonexion® storage systems. Consisting of three phases spanning multiple years, the $128 million contract expands Cray's significant presence in the global weather and climate community, and is the largest supercomputer contract ever for Cray outside of the United States. |

| September 5, 2014 - Focus on several R&D Projects

CRESTA (Collaborative Research into Exascale Systemware, Tools & Applications) is a collaborative research effort funded by the European Union that explores how to meet the exaflop challenge. A key enabler of CRESTA’s work is access to the many large Cray supercomputers installed at the CRESTA partner sites in Europe. Below are some recent examples of customer projects that benefited from the CRESTA partnerships:

ECMWF uses the Integrated Forecast System (IFS) model to provide medium-range weather forecasts to its 34 European member states. Today’s simulations use a global grid with a 16km resolution, but ECMWF expects to reduce this to a 2.5km global weather forecast model by 2030 using an exascale-sized system. To achieve this, IFS needs to run efficiently on a thousand times more cores. The CRESTA improvements have already enabled IFS to use over 200,000 CPU cores on Titan. This is the largest number ever used for an operational weather forecasting code and represents the first use of the pre-exascale 5km resolution model that will be needed in medium-range forecasts in 2023. This breakthrough came from using new programming models to eliminate a performance bottleneck. For the first time, the Cray Compiler Environment (CCE) was used to nest Fortran coarrays within OpenMP, absorbing communication time into existing calculations. For more information: http://www.cray.com/Assets/PDF/products/xc/XC30-ECMWF-IFS-0614.pdf
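The resolution figures above can be related with a rough estimate of how the number of horizontal grid columns grows as the grid spacing shrinks. The sketch below assumes a uniform grid covering an Earth surface area of about 5.1 × 10⁸ km². That is a simplification that ignores the actual IFS grid layout, vertical levels and time stepping, and the function name is hypothetical.

```python
EARTH_SURFACE_KM2 = 5.1e8  # approximate surface area of the Earth

def approx_columns(resolution_km: float) -> float:
    """Rough count of horizontal grid columns for a uniform grid
    with the given spacing (illustrative estimate only)."""
    return EARTH_SURFACE_KM2 / resolution_km ** 2

baseline = approx_columns(16.0)
for res in (16.0, 5.0, 2.5):
    cols = approx_columns(res)
    print(f"{res:>4} km: ~{cols:,.0f} columns, {cols / baseline:.1f}x the 16 km grid")
```

Horizontal refinement alone accounts for only part of the thousand-fold increase in cores mentioned in the text. The remaining factors, presumably including shorter time steps, are not modeled here.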

The HemeLB research group at University College London (UCL) develops software to model intracranial blood flow, investigating blood flow patterns and the formation process of aneurysms. The team uses ARCHER, the U.K.’s flagship Cray XC30 system with 3,008 nodes and a system total of 72,192 cores, to accelerate its science. A sophisticated communication approach based on MPI allows HemeLB to be run on large supercomputers, and therefore the project fits perfectly into CRESTA, as it stimulates the development of technologies leading to the next generation of supercomputers at the exascale. The HemeLB application initially crashed when run on 50,000 processor cores. The Allinea DDT parallel debugger was able to debug all 50,000 application processes simultaneously, which helped get HemeLB to scale to 50,000 ARCHER cores. Afterwards, Allinea Software’s profiling tool, Allinea MAP, led to an adjustment that avoided an I/O bottleneck, enabling the application to scale successfully and improving performance on those cases by over 25 percent. For more information: http://www.cray.com/Assets/PDF/products/xc/Case-Study-Allinea-Brain-Surgery.pdf
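As a quick check on the ARCHER figures quoted above, the sketch below derives the cores per node from the node and core totals and estimates how much of the machine a 50,000-core run occupies. It assumes every node has the same core count and that jobs are allocated whole nodes. Both are assumptions made for illustration.

```python
import math

ARCHER_NODES = 3008        # from the text
ARCHER_CORES = 72192       # from the text
RUN_CORES = 50000          # HemeLB run size from the text

# Cores per node, assuming a homogeneous system.
cores_per_node, remainder = divmod(ARCHER_CORES, ARCHER_NODES)

# Whole nodes needed for the run, and the share of the machine it uses.
nodes_needed = math.ceil(RUN_CORES / cores_per_node)
machine_share = RUN_CORES / ARCHER_CORES

print(f"{cores_per_node} cores per node (remainder {remainder})")
print(f"{nodes_needed} nodes needed, {machine_share:.0%} of all cores")
```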

CRESTA members Cray and the Technische Universität Dresden presented at CUG14 a paper titled “User-level Power Monitoring and Application Performance on Cray XC30 Supercomputers”, which was runner-up for best paper at the conference. The study is a good example of how to begin a meaningful co-design process for energy efficient exascale supercomputers and applications. New power measurement and control features introduced in the Cray XC supercomputer range for both system administrators and users can be utilized to monitor energy consumption, both for complete jobs and also for application phases. This information can then be used to investigate energy efficient application placement options on Cray XC30 architectures, including mixed use of both CPU and GPU on accelerated nodes and interleaving processes from multiple applications on the same node. Conference Proceedings are available at the CUG site but if you would like to know more about the subject please send us an email to crayinfo@cray.com.
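To illustrate what monitoring energy per application phase can mean in practice, here is a minimal sketch that integrates timestamped power readings into joules per phase using the trapezoidal rule. The sample values, the phase labels and the function name are hypothetical. The code does not use or represent the actual Cray XC power monitoring interface.

```python
from typing import Dict, List, Tuple

# (time in seconds, power in watts, phase label) -- hypothetical readings
Sample = Tuple[float, float, str]

def energy_by_phase(samples: List[Sample]) -> Dict[str, float]:
    """Integrate power over time with the trapezoidal rule.
    Each interval is attributed to the phase of its starting sample."""
    energy: Dict[str, float] = {}
    for (t0, p0, phase), (t1, p1, _) in zip(samples, samples[1:]):
        joules = 0.5 * (p0 + p1) * (t1 - t0)
        energy[phase] = energy.get(phase, 0.0) + joules
    return energy

# Made-up readings for illustration only.
readings: List[Sample] = [
    (0.0, 100.0, "init"),
    (1.0, 100.0, "init"),
    (2.0, 300.0, "compute"),
    (3.0, 300.0, "compute"),
    (4.0, 300.0, "compute"),
]

per_phase = energy_by_phase(readings)
print(per_phase, "total J:", sum(per_phase.values()))
```

Comparing per-phase totals like these across different placements is one way such measurements could feed the kind of investigation the paper describes.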

|

September 5, 2014 - The Cray CS-Storm cluster is an accelerator-optimized system that consists of multiple high-density multi-GPU server nodes, designed for massively parallel computing workloads. Each CS-Storm cluster rack holds up to 22 2U rackmount CS-Storm server nodes. Each server integrates eight accelerators and two Intel® Xeon® processors, delivering 246 GPU teraflops of compute performance in one 48U rack. The system is available with a comprehensive HPC software stack including tools that are customizable to work with most open-source and commercial compilers, schedulers and libraries. The CS-Storm system provides performance advantages for users who collect and process massive amounts of data from diverse sources such as satellite images, surveillance cameras, financial markets and seismic processing. It is well suited for HPC workloads in the defense, oil and gas, media and entertainment, life sciences and business intelligence sectors. Typical applications include cybersecurity, geospatial intelligence, pattern recognition, seismic processing, rendering and machine learning. For more information, see http://www.cray.com/Products/Computing/CS/Optimized_Solutions/CS-
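The rack-level figures in the CS-Storm description can be cross-checked with simple arithmetic. The sketch below assumes a fully populated rack and an even split of the quoted GPU teraflops across all accelerators. The per-accelerator value is therefore derived for illustration and is not a published specification.

```python
NODES_PER_RACK = 22          # from the text
ACCELERATORS_PER_NODE = 8    # from the text
NODE_HEIGHT_U = 2            # 2U rackmount nodes, from the text
RACK_HEIGHT_U = 48           # from the text
RACK_GPU_TERAFLOPS = 246.0   # from the text

accelerators = NODES_PER_RACK * ACCELERATORS_PER_NODE
rack_units_used = NODES_PER_RACK * NODE_HEIGHT_U

# Derived value, assuming the rack total is split evenly.
tflops_per_accelerator = RACK_GPU_TERAFLOPS / accelerators

print(f"{accelerators} accelerators per rack")
print(f"{rack_units_used}U of {RACK_HEIGHT_U}U used by compute nodes")
print(f"~{tflops_per_accelerator:.2f} teraflops per accelerator")
```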

|