
A key result of the research carried out by INRIA Bordeaux within this project is an advanced version of the Hwloc-Netloc software, which can now model complex HPC infrastructures (compute nodes and switches). Such a model makes it possible to visualise the topology of very large platforms such as the GENCI cluster CURIE (5000 compute nodes and 800 switches) or Tianhe-2 (18432 compute nodes and 577 switches). Moreover, an API has been developed to provide this topology information to resource management tools such as SLURM (which has been adapted by Bull) and task scheduling tools such as OpenMPI. Using knowledge of the volume of data exchanged between tasks/processes (the communication matrix), these tools can optimise process placement, thereby reducing the cost of data transfers and improving application execution.

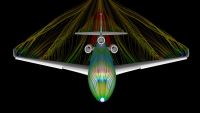

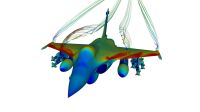

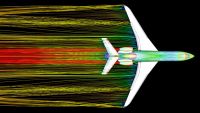
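The placement idea described above can be illustrated with a minimal sketch. It is not the Hwloc-Netloc API nor the algorithm used in SLURM or OpenMPI: the function names, the communication matrix and the distance matrix are all hypothetical, and the exhaustive search only works for a handful of processes. It simply shows how a communication matrix combined with topology distances yields a cost that a placement tool can minimise.

```python
from itertools import permutations


def placement_cost(comm, dist, placement):
    """Sum of (traffic volume x topology distance) over all process pairs.

    comm[i][j]   : volume exchanged between processes i and j (symmetric)
    dist[a][b]   : distance between cores a and b in the topology
    placement[i] : core assigned to process i
    """
    n = len(comm)
    return sum(
        comm[i][j] * dist[placement[i]][placement[j]]
        for i in range(n)
        for j in range(i + 1, n)
    )


def best_placement(comm, dist):
    """Brute-force search over all placements (illustration only)."""
    n = len(comm)
    cores = range(len(dist))
    return min(permutations(cores, n),
               key=lambda p: placement_cost(comm, dist, p))


# Hypothetical communication matrix: pairs (0, 2) and (1, 3) exchange
# a lot of data, pairs (0, 1) and (2, 3) only a little.
comm = [
    [0, 1, 10, 0],
    [1, 0, 0, 10],
    [10, 0, 0, 1],
    [0, 10, 1, 0],
]

# Hypothetical topology: cores 0-1 share one node, cores 2-3 another.
# Distance 1 inside a node, 4 when traffic crosses the switch.
dist = [
    [0, 1, 4, 4],
    [1, 0, 4, 4],
    [4, 4, 0, 1],
    [4, 4, 1, 0],
]

naive = (0, 1, 2, 3)              # process i simply runs on core i
tuned = best_placement(comm, dist)

print("naive cost:", placement_cost(comm, dist, naive))
print("tuned cost:", placement_cost(comm, dist, tuned))
```

In this toy case the tuned placement puts the heavily communicating pairs on the same node, so most of the traffic no longer crosses the switch. Real platforms have far too many processes for exhaustive search, which is why dedicated placement heuristics are needed.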

Another remarkable achievement is the ‘Divide & Conquer’ mechanism co-designed by UVSQ and Dassault-Aviation (DA), which solved data locality and task synchronisation problems and thus greatly improved the scalability of the DA Aether CFD solver.

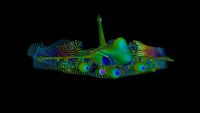

Moreover, the asynchronous implementation of data exchanges using GASPI (Global Address Space Programming Interface) enabled DA to cut the time spent on communications by a factor of two to four in the MLFMM (Multilevel Fast Multipole Method) algorithm of its proprietary CEM (Computational Electromagnetics) simulation software.

All these results have been demonstrated on industrial use cases, sometimes pushing the current limits of simulation at DA.

Regarding the infrastructure, Exascale and Big Data challenges led the ATOS/Bull team to develop the Proxy-IO feature, a new mechanism for handling massive input/output. This mechanism reduces input/output processing on compute nodes and thereby frees up compute power. It also makes it possible to aggregate, encrypt and compress data, to increase scalability, and to reduce the recovery time following a failure.

Likewise, UVSQ enriched MAQAO, its performance analysis and optimisation tool, which now enables more precise diagnoses of data access: impact of latency, impact of the out-of-order mechanisms, etc.

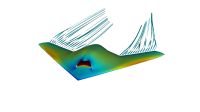

In addition, our Swedish partner ESI/Efield, which develops CEM simulation software, validated and took advantage of the advances described above, all of which were installed on the project's common platform. Efield could, for instance, reduce the execution time of the MLFMM algorithm by 60% and triple the size of the simulated element. Moreover, the application, which could not run efficiently on more than 12 cores, is now able to take advantage of a distributed architecture featuring 240 cores. Similar results were obtained with other applications: the ability to process problems three times larger (up to 30M unknowns) with FEM (Finite Element Method) when simulating a large microwave oven for curing composites, and a 70% reduction in the execution time of an FDTD (finite-difference time-domain) algorithm for antenna simulation with complex geometry and materials. All these improvements have been, or are about to be, integrated into the commercial release of the CEM One software, thereby improving its competitiveness.
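As a rough reading of these figures, and assuming the percentages refer to wall-clock time for the same problem on the same platform (the source does not say so explicitly), a reduction of the execution time by a fraction $r$ corresponds to a speedup $S$:

$$
S = \frac{T_{\text{before}}}{T_{\text{after}}} = \frac{1}{1 - r},
\qquad
S_{\text{MLFMM}} = \frac{1}{1 - 0.60} = 2.5,
\qquad
S_{\text{FDTD}} = \frac{1}{1 - 0.70} \approx 3.3
$$

In other words, a 60% reduction means the run is 2.5 times faster, and a 70% reduction means it is about 3.3 times faster.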

Another challenge posed by ITEA was to deploy the results of the project to non-expert HPC users.

Our partner Scilab, recently integrated into the ESI group, addressed this by developing a web front-end that enables Scilab users to benefit from Scilab Cloud facilities without having to learn about HPC. By calling Scilab functions they can, for instance, launch CFD simulation software running on Scilab Cloud, take advantage of the MUMPS software (see mumps-solver.org) to solve complex problems, or execute computationally intensive functions on a powerful parallel infrastructure equipped with GPUs using the SciCUDA facility.

In short, the COLOC project helped the project partners make clear progress in data locality, supercomputer resource optimisation, and parallel application efficiency. A further important step has been taken in solving some of the Exascale and Big Data challenges and in allowing more users to leverage the power of HPC.